Jon Toor, CMO, Cloudian

Jon Toor, CMO, Cloudian

Cloud computing and on-prem computing will always co-exist, we believe. A recent article from the venture capital firm Andreesen Horowitz makes a compelling case for that. The article (“The Cost of Cloud, a Trillion Dollar Paradox”) examined the impact of public cloud costs on public company financials and found that they reduce the total market value for those companies using cloud at scale by at least $500 billion.

Here are some of the article’s key findings:

- “If you’re operating at scale, the cost of cloud can at least double your infrastructure bill.”: The authors note that public cloud list prices can be 10-12X the cost of running your own data centers. Although use-commitment and volume discounts can reduce the difference, the cloud is still significantly more expensive.

- “Some companies we spoke with reported that they exceeded their committed cloud spend forecast by at least 2X.” Cloud spend can be hard to predict, resulting in spending that often exceeds plan. Companies surveyed for the article indicate that actual spend is often 20% higher than committed spend and at least 2X in some cases.

- “Repatriation results in one-third to one-half the cost of running equivalent workloads in the cloud.”: This takes into account the TCO of everything from server racks, real estate, and cooling to network and engineering costs.

- “The cost of cloud ‘takes over’ at some point, locking up hundreds of billions of market cap that are now stuck in this paradox: You’re crazy if you don’t start in the cloud; you’re crazy if you stay on it.”: While public cloud delivers on its promise early on, as a company scales and its growth slows, the impact of cloud spend on margins can start to outweigh the benefits. Because this shift happens later in a company’s life, it’s difficult to reverse.

- “Think about repatriation upfront.” By the time cloud costs start to catch up to or even outpace revenue growth, it’s too late. Even modest or modular architectural investment early on reduces the work needed to repatriate workloads in the future. In addition, repatriation can be done incrementally, and in a hybrid fashion.

- “Companies need to optimize early, often, and, sometimes, also outside the cloud.”: When evaluating the value of any business, one of the most important factors is the cost of goods sold (COGS). That means infrastructure optimization is key.

- “The popularity of Kubernetes and the containerization of software, which makes workloads more portable, was in part a reaction to companies not wanting to be locked into a specific cloud.”: Developers faced with larger-than-expected cloud bills have become more savvy about the need for greater rigor when it comes to cloud spend.

- “For large companies — including startups as they reach scale — that [cloud flexibility] tax equates to hundreds of billions of dollars of equity value in many cases.”: This tax is levied long after the companies have committed themselves to the cloud. However, one of the primary reasons organizations have moved to the cloud early on – avoiding large CAPEX outlays – is no longer limited to public clouds. There are now data center alternatives that can be built, deployed, and managed entirely as OPEX.

In short, the article highlights the need to think carefully about which use cases are better suited for on-prem deployment. Public cloud can provide flexibility and scalability benefits, but at a cost that can significantly impact your company’s financial performance.

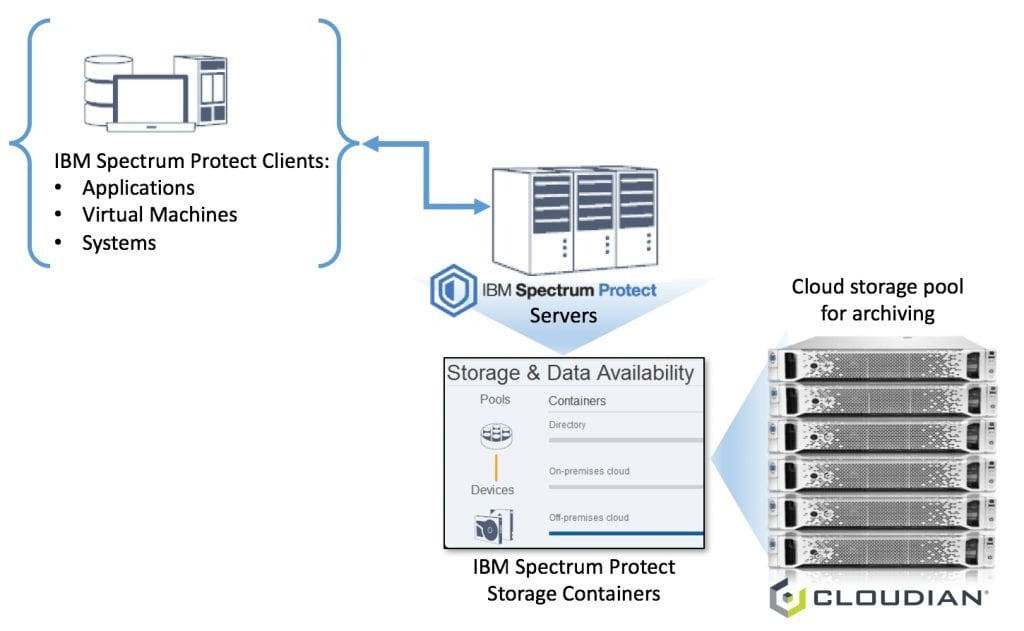

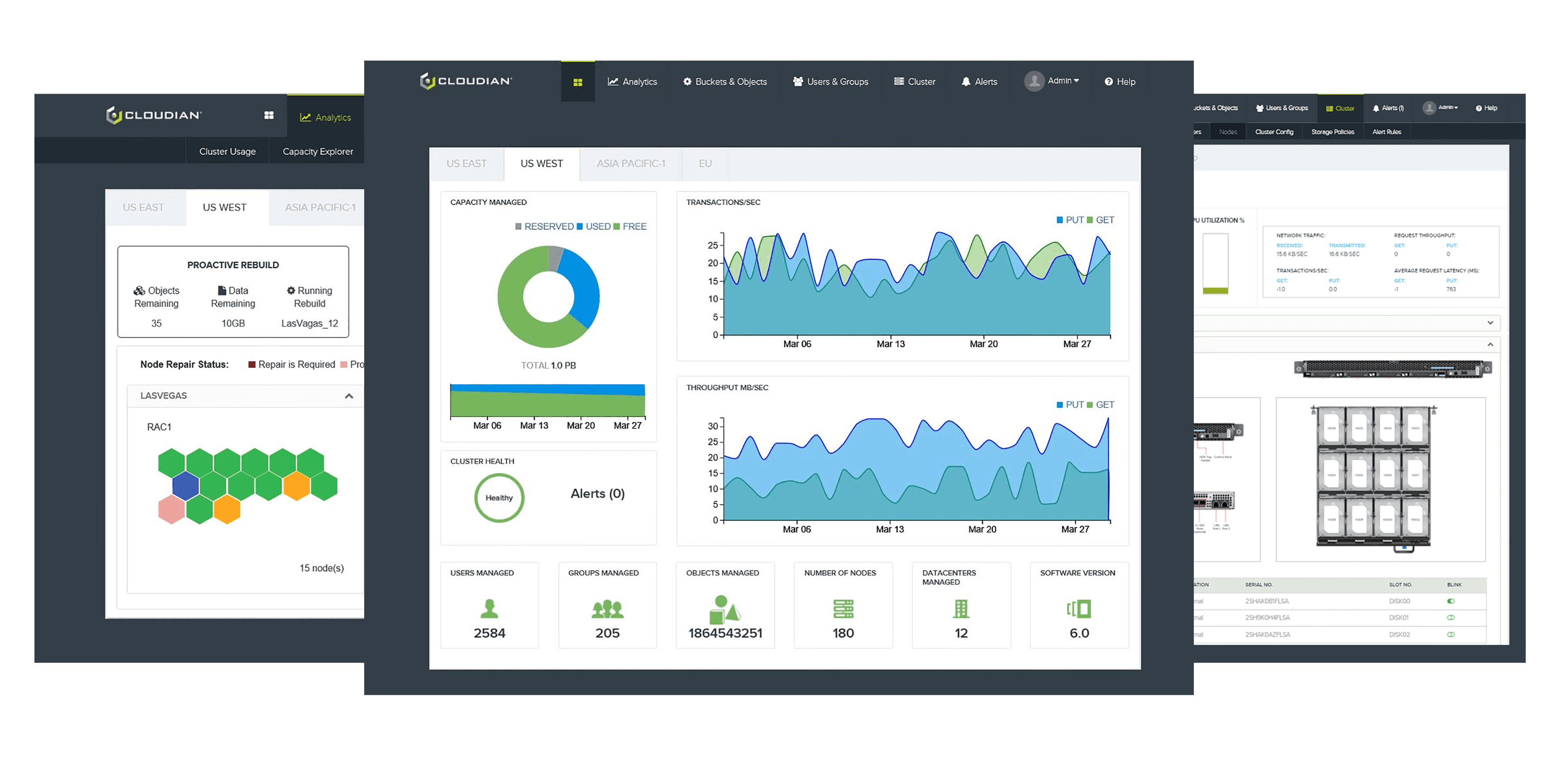

Cloudian was founded on the idea of bringing public cloud benefits to the data center, and we now have nearly 700 enterprise and service provider customers that have deployed our award-winning HyperStore object storage platform in on-prem and hybrid cloud environments. On-prem object storage can deliver public cloud-like benefits in your own data center, at less cost and with performance, agility, security and control advantages. In addition, as long as the object storage is highly S3-compatible, it can integrate easily with public cloud in a hybrid cloud model.

To learn more about how we can help you find the right cloud storage strategy for your organization, visit cloudian.com/solutions/cloud-storage/. You can also read about deploying HyperStore on-prem with AWS Outposts at cloudian.com/aws.

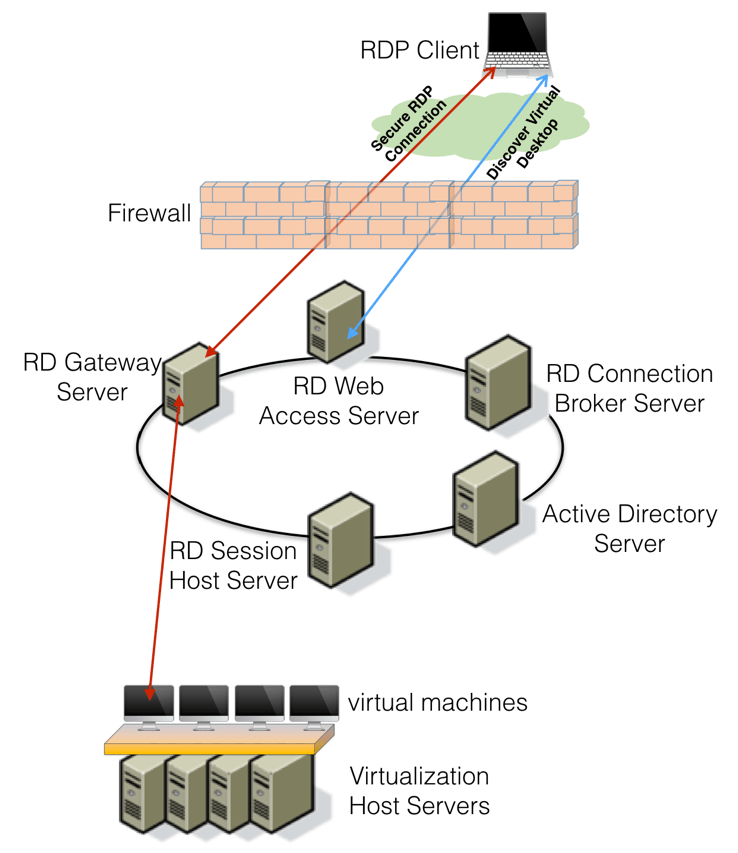

Grant Jacobson, Director of Technology Alliances and Partner Marketing, Cloudian

Grant Jacobson, Director of Technology Alliances and Partner Marketing, Cloudian

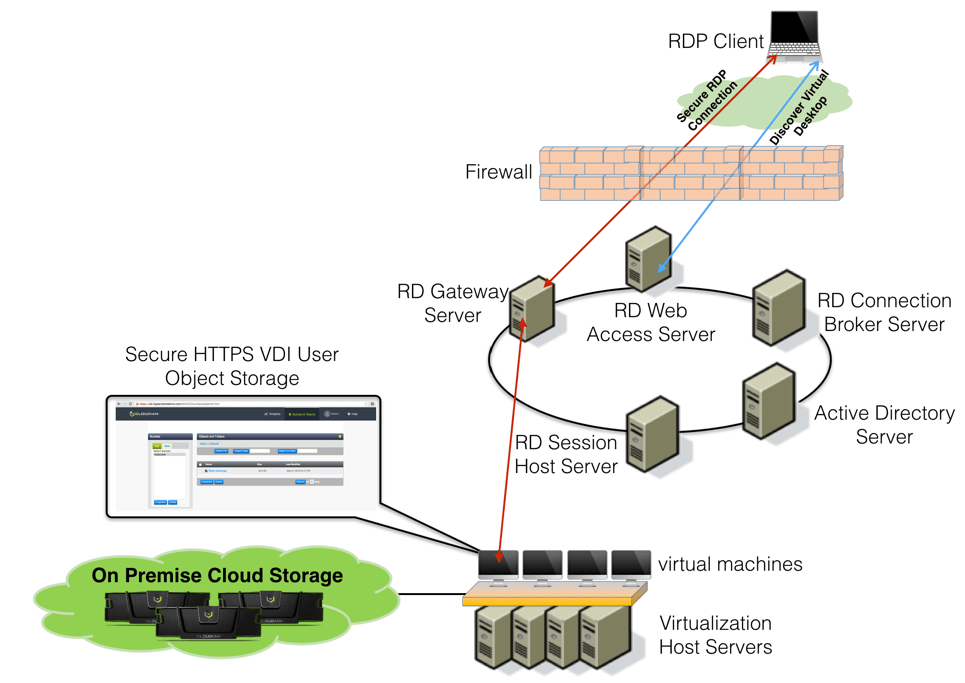

Amit Rawlani, Director of Solutions & Technology Alliances, Cloudian

Amit Rawlani, Director of Solutions & Technology Alliances, Cloudian

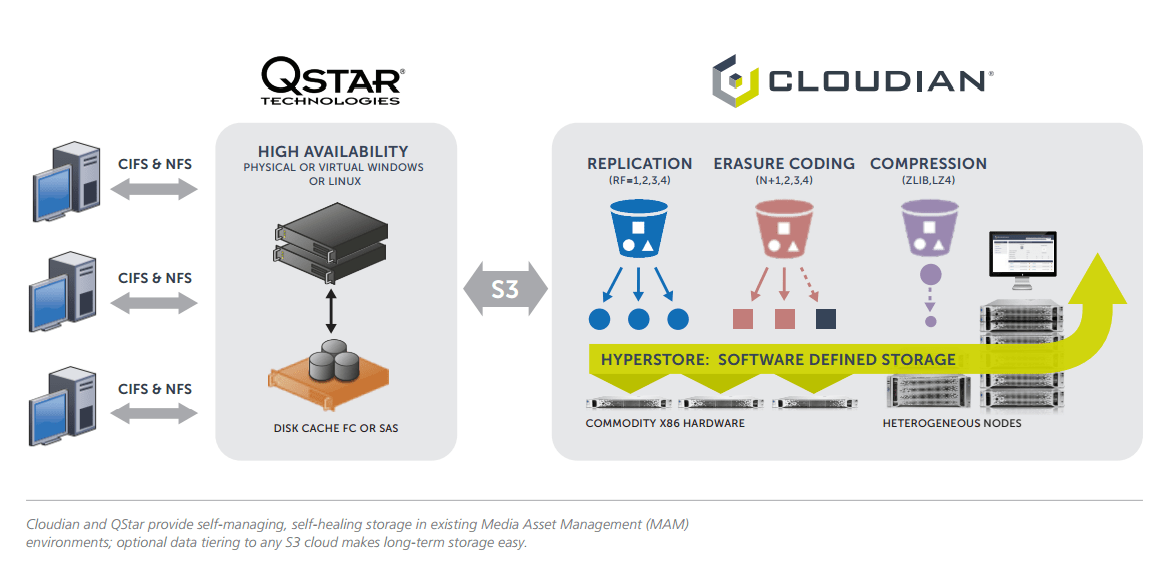

Check out this quick video we filmed live at NAB this year while catching up with QStar’s VP of Sales and Marketing, David Thomson:

Check out this quick video we filmed live at NAB this year while catching up with QStar’s VP of Sales and Marketing, David Thomson: